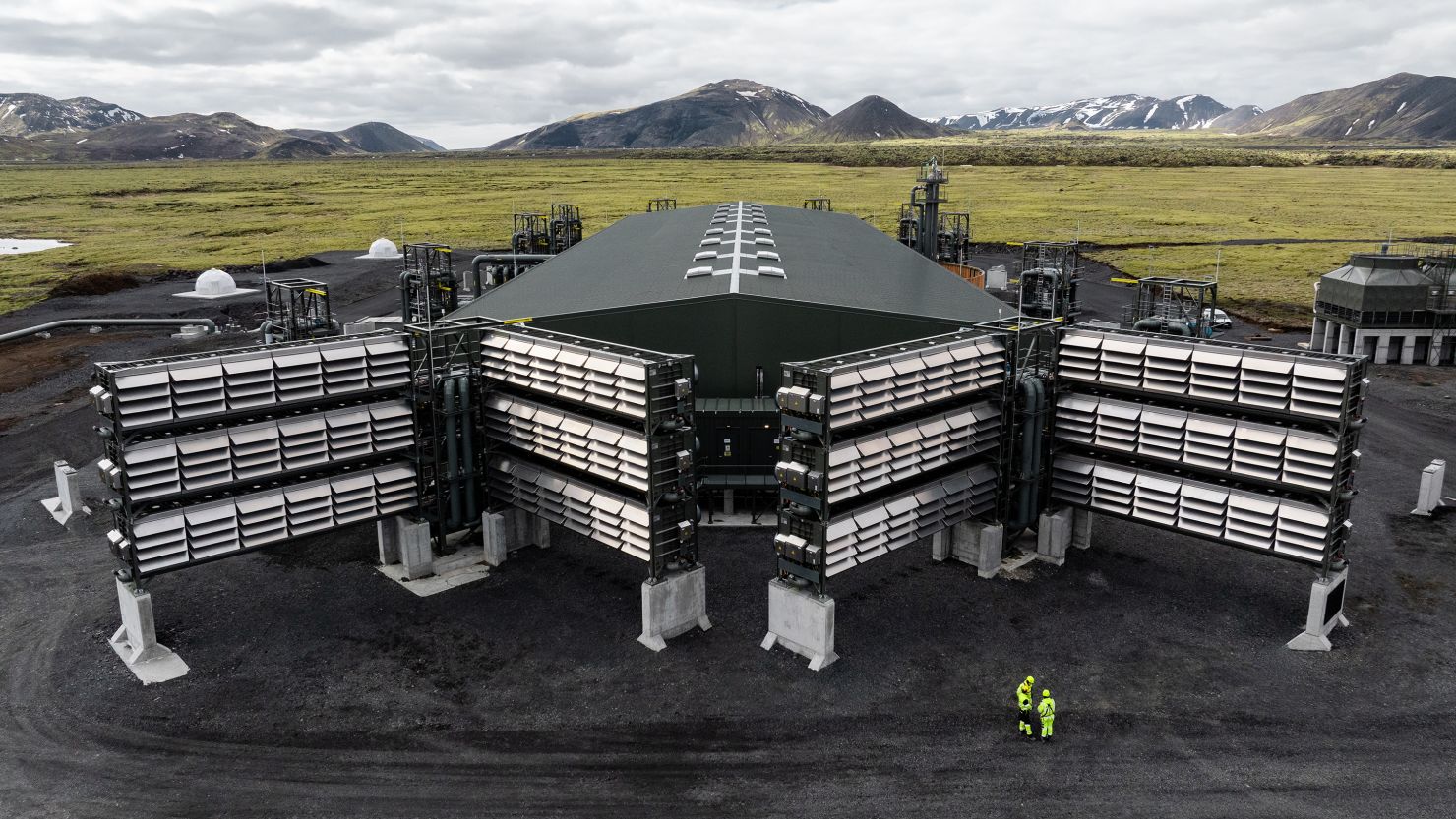

Artificial intelligence (AI)? Large amounts of data, often personal or private, ingested, analysed, combined and assembled into new forms, usually through collection methods and for purposes that remain unclear.

Three adjectives here: personal, new, and mysterious.

And three worries.

Is personal data protected?

Do the new forms violate intellectual property protection, or do they benefit from it?

Who is responsible when an AI solution makes a harmful decision?

These are just some of the issues.

AI involves “business risks, reputational risks and legal risks,” says Paul Gagnon, Co-Leader of Technology and AI at BCF Business Lawyers.

His colleague Misha Benjamin adds: “Internal chatbots, which are very popular, are a great example of how all three can happen at the same time.”

In French, some call this an agent conversationnel or a dialogueur.

We take ChatGPT, deploy it internally and give it access to our data. If I work at a consulting engineering firm and want to know how to solve a problem, instead of asking three of my colleagues, I can ask a chatbot.

Misha Benjamin, Co-Leader of Technology and AI at BCF Business Lawyers

The conversational agent will summarize the information the company already holds, link to documents relevant to the topic, and perhaps name people with expertise in that area.

“It's very useful. But if we don't clearly define how it is governed and plan the project accordingly, I can also ask it how much my colleague in the equivalent position earns, or who has been reprimanded by HR. From a privacy standpoint, you can see the problems.”

Existing law

After all, artificial intelligence did not emerge in a society without safeguards.

“Many existing laws already provide a legal framework for AI, even before we adopt laws specific to AI,” notes Eric Lavallee, head of the Lavery Law AI Lab.

“In Quebec, we already had the Act to establish a legal framework for information technology, which to some extent regulates artificial intelligence or its use by companies.”

Labor law also comes into play.

“If we use an AI tool to make hiring or disciplinary decisions and we find that it is biased, existing laws apply, and we don't need a new law to tell us that we will be held accountable,” says Misha Benjamin.

Moreover, the rapid rise of generative artificial intelligence, which can produce text or images, for example, raises new intellectual property issues.

Under existing laws, we can use AI as a tool and still own what we create. But we could also use AI to invent things that we won't fully own, depending on the agreement with whoever makes the AI available to us.

Misha Benjamin

New legal frameworks

To confront these unprecedented challenges, new legal frameworks are being built.

Last March, the European Parliament adopted what is billed as the world's first law on artificial intelligence, which aims to ensure safety and respect for fundamental rights while encouraging innovation.

“In certain contexts, depending on the risks involved, obligations are created, such as public disclosure or a risk analysis,” says Paul Gagnon. “In Canada, Bill C-27, currently under study, provides for the same type of risk analysis for the development of artificial intelligence systems.”

These legal frameworks, both existing and new, define the standards of practice and the precautions that companies must now take when using AI.

“We are here precisely to help people start building this muscle (how to manage projects properly, how to govern AI within a company) so that they are ready when the laws come into force,” explains Misha Benjamin.

Companies can already start practicing.
